What’s the difference between Artificial Intelligence, Machine Learning and Deep Learning?

Artificial intelligence has held a place in our imagination since the beginning of the 20th century. As early as the 1930s and 1940s, computing pioneers such as Alan Turing began formulating the basic techniques, such as neural networks, that make today's AI possible.

Today, AI is already all around us. Google uses Machine Learning to filter spam out of Gmail. Facebook trained computers to identify specific human faces nearly as accurately as humans do. Netflix and Amazon use Deep Learning to decide what you want to watch or buy next.

OK, so you hear these buzzwords almost every day, but what do Artificial Intelligence, Machine Learning, and Deep Learning actually mean? Are these terms related or overlapping? What technology is behind them? And what are their applications in the geospatial industry?

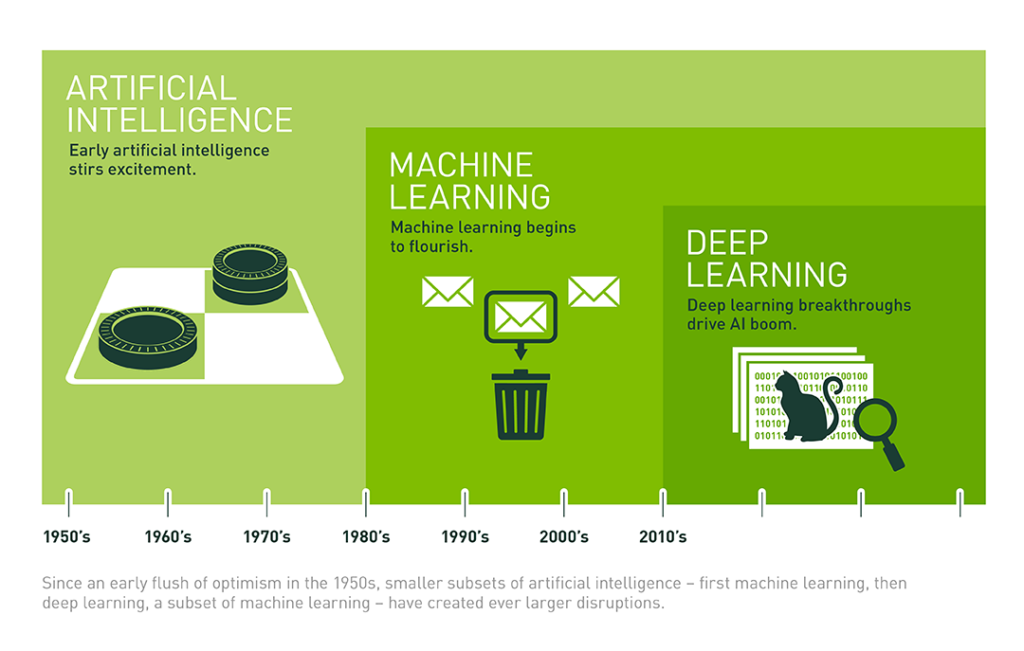

Deep Learning is a subset of Machine Learning, and both are state-of-the-art approaches to AI (source: NVIDIA Blog)

Artificial Intelligence is a broad umbrella term for attempts to make computers think the way humans think, simulate the kinds of things humans do, and ultimately solve problems better and faster than we can. AI is a rather generic term for solving tasks that are easy for humans but hard for computers. It covers all kinds of tasks, such as creative work, planning, moving around, speaking, recognizing objects and sounds, and performing social or business transactions.

Researchers have tried many different approaches to creating AI, but today the area that brings the most promising and relevant results is Machine Learning. The idea behind it is fairly simple: rather than making computers smart by hand-coding software routines with a specific set of instructions for a particular task, you give machines access to large amounts of sample data and program them to find patterns and learn how to perform the task on their own.
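To make the contrast concrete, here is a minimal Python sketch of "learning from examples" using a decision stump, about the simplest learner there is. The labeled messages, the suspicious-word feature, and the function names are hypothetical illustrations, not how Gmail or any real spam filter works: instead of a programmer guessing the cut-off, `learn_threshold` picks the one that fits the sample data best.

```python
# Hypothetical labeled examples: (number of suspicious words in a message, is_spam).
EXAMPLES = [(0, False), (1, False), (2, False), (3, True),
            (5, True), (7, True), (1, False), (4, True)]


def hand_coded_rule(count):
    """A rule a programmer guessed in advance."""
    return count > 5


def learn_threshold(data):
    """Decision stump: pick the threshold t that makes the fewest mistakes for 'count >= t'."""
    best_t, best_errors = None, len(data) + 1
    for t in sorted({count for count, _ in data}):
        errors = sum((count >= t) != is_spam for count, is_spam in data)
        if errors < best_errors:
            best_t, best_errors = t, errors
    return best_t


THRESHOLD = learn_threshold(EXAMPLES)


def learned_rule(count):
    """The rule the program found on its own from the sample data."""
    return count >= THRESHOLD


def count_errors(rule, data):
    """Number of examples the rule gets wrong."""
    return sum(rule(count) != is_spam for count, is_spam in data)


print("learned threshold:", THRESHOLD)
print("hand-coded rule errors:", count_errors(hand_coded_rule, EXAMPLES))
print("learned rule errors:", count_errors(learned_rule, EXAMPLES))
```

On this toy data the learned rule classifies every example correctly, while the guessed rule `count > 5` misses three spam messages. Real systems learn far more parameters from far more data, but the principle is the same.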

In 2007, researchers at Stanford's Artificial Intelligence Lab decided to give up on trying to program computers to recognize objects and took a different approach. They created a project called ImageNet, which aimed to label millions of raw images from the Internet with a level of detail similar to what a three-year-old child can recognize. These labeled images were then fed to so-called convolutional neural networks running on powerful computers. By being shown thousands upon thousands of labeled images containing, for example, a cat, the machine could form its own rules for deciding whether a particular set of digital pixels was, in fact, a cat.

Machine Learning builds on algorithmic approaches that over the years have included decision tree learning, inductive logic programming, clustering, reinforcement learning, and Bayesian networks, among others. But it was developments in neural networks, which are loosely inspired by the way the human brain processes information, that made such a breakthrough possible.

Although Artificial Neural Networks have been around for a long time, only in the last few years have computing power and the vector-processing capabilities of GPUs made it possible to build networks with many more, and much larger, layers than before, with remarkable results. Although there is no clear boundary between the terms, this area of Machine Learning is often described as Deep Learning.
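A minimal sketch of what "deeper" means, assuming a plain fully connected network in pure Python with hypothetical layer sizes and random, untrained weights. Each layer is a matrix-vector product followed by a ReLU, and stacking more layers makes the network deeper. Every multiply-add inside a layer is independent of the others, which is exactly the kind of work GPU vector processing parallelizes well.

```python
import random


def dense_layer(inputs, weights, biases):
    """One fully connected layer: matrix-vector product plus bias, then ReLU."""
    return [
        max(0.0, sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]


def random_layer(n_in, n_out, rng):
    """Untrained layer with random weights (hypothetical; a real network learns these)."""
    weights = [[rng.uniform(-1.0, 1.0) for _ in range(n_in)] for _ in range(n_out)]
    biases = [0.0] * n_out
    return weights, biases


def forward(inputs, layers):
    """Pass the input through every layer in turn; more layers means a deeper network.
    ReLU is applied to every layer, including the output, to keep the sketch short."""
    activations = inputs
    for weights, biases in layers:
        activations = dense_layer(activations, weights, biases)
    return activations


rng = random.Random(0)
LAYER_SIZES = [4, 8, 8, 8, 3]  # input, three hidden layers, output (hypothetical sizes)
LAYERS = [random_layer(n_in, n_out, rng) for n_in, n_out in zip(LAYER_SIZES, LAYER_SIZES[1:])]

output = forward([0.2, 0.5, 0.1, 0.9], LAYERS)
multiply_adds = sum(n_in * n_out for n_in, n_out in zip(LAYER_SIZES, LAYER_SIZES[1:]))
print("output:", output)
print("multiply-adds per forward pass:", multiply_adds)
```

With these tiny sizes a single forward pass already needs 184 multiply-adds; large image networks can need billions per image, which is why GPUs made Deep Learning practical.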

In the most basic terms, Deep Learning can be explained as a system of probability. Based on a large dataset you feed it, it can make statements, decisions, or predictions with a degree of certainty. So the system might be 78% confident that there is a cat in the image, 91% confident that it's an animal, and 8% confident that it's a toy. You can then add a feedback loop on top of it, telling the machine whether its decisions were correct. That enables it to learn and to modify the decisions it makes in the future.
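A minimal sketch of both ideas, assuming a single-layer logistic model rather than a real deep network. Each label gets its own sigmoid output, so the confidences are independent and need not sum to 100% (just like the 78% / 91% / 8% example), and the "feedback loop" is one gradient-descent step on the log loss per round. The features, labels, and learning rate are hypothetical.

```python
import math

LABELS = ["cat", "animal", "toy"]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def predict(weights, biases, features):
    """Independent confidence (0..1) for each label; they need not sum to 1."""
    return {
        label: sigmoid(sum(w * x for w, x in zip(weights[label], features)) + biases[label])
        for label in LABELS
    }


def feedback(weights, biases, features, truth, learning_rate=0.5):
    """Feedback loop: one gradient-descent step on the log loss for each label."""
    probs = predict(weights, biases, features)
    for label in LABELS:
        target = 1.0 if truth[label] else 0.0
        error = probs[label] - target  # gradient of the log loss w.r.t. the label's score
        weights[label] = [w - learning_rate * error * x for w, x in zip(weights[label], features)]
        biases[label] -= learning_rate * error


features = [0.8, 0.3, 0.5]  # hypothetical features extracted from one image
truth = {"cat": True, "animal": True, "toy": False}  # the correct answers fed back
weights = {label: [0.0, 0.0, 0.0] for label in LABELS}
biases = {label: 0.0 for label in LABELS}

before = predict(weights, biases, features)
for _ in range(20):
    feedback(weights, biases, features, truth)
after = predict(weights, biases, features)

for label in LABELS:
    print(f"{label}: {before[label]:.0%} -> {after[label]:.0%}")
```

Before any feedback every confidence is 50%; after 20 rounds of feedback the confidences for "cat" and "animal" have risen and the confidence for "toy" has fallen.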

So what are the applications of AI, ML and DL in the geospatial industry?

One of the most useful applications of Machine Learning in the geospatial industry is image recognition. A system trained on thousands of images to detect a particular object learns which patterns of pixels are associated with the expected result. This technology can be applied at many different levels and has a huge impact on the industry.
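Learning "which pattern of pixels is associated with the expected result" can be sketched with a perceptron, a much simpler technique than the convolutional networks used on real imagery. The 3x3 binary patches and their labels below are hypothetical: the target pattern is a vertical line through the middle column, loosely standing in for a linear feature such as a road. The perceptron starts with zero weights and nudges them whenever it misclassifies a patch, until every training patch is classified correctly.

```python
# Hypothetical 3x3 binary image patches, flattened row by row.
# Label +1: a vertical line through the middle column (the "object" to detect).
# Label -1: horizontal lines, an empty patch, and other clutter.
TRAINING = [
    ([0, 1, 0, 0, 1, 0, 0, 1, 0], +1),
    ([1, 1, 0, 0, 1, 0, 0, 1, 0], +1),
    ([0, 1, 0, 0, 1, 0, 0, 1, 1], +1),
    ([0, 0, 0, 1, 1, 1, 0, 0, 0], -1),
    ([1, 0, 0, 1, 1, 1, 0, 0, 0], -1),
    ([0, 0, 0, 0, 0, 0, 0, 0, 0], -1),
    ([0, 0, 0, 1, 1, 1, 0, 0, 1], -1),
]


def score(weights, bias, pixels):
    return sum(w * p for w, p in zip(weights, pixels)) + bias


def classify(weights, bias, pixels):
    return +1 if score(weights, bias, pixels) > 0 else -1


def train_perceptron(data, max_epochs=100):
    """Classic perceptron rule: adjust weights only when a patch is misclassified."""
    weights, bias = [0] * 9, 0
    for _ in range(max_epochs):
        mistakes = 0
        for pixels, label in data:
            if label * score(weights, bias, pixels) <= 0:
                # Move the weights toward the pixel pattern of the correct answer.
                weights = [w + label * p for w, p in zip(weights, pixels)]
                bias += label
                mistakes += 1
        if mistakes == 0:  # every training patch classified correctly
            return weights, bias, True
    return weights, bias, False


weights, bias, converged = train_perceptron(TRAINING)
print("converged:", converged)
for row in range(3):
    print(weights[3 * row: 3 * row + 3])  # learned weight for each pixel position
```

Printing the learned weights as a 3x3 grid shows which pixels push the decision toward "object present" (positive weights) and which push it away (negative weights).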

A startup called Orbital Insight uses Machine Learning to make sense of satellite imagery.

PwC is applying Neural Networks to drone-based aerial imagery for construction monitoring.

Machine Learning is a key enabler of autonomous driving technologies.

But image recognition is just one part of the game. Machine Learning and other AI techniques have been transforming many areas of the geospatial industry, in particular those that require analyzing location-based Big Data for pattern recognition, forecasting, and data modeling.

The more data (including geospatial data) we generate, the more help we need to understand and interpret it. The potential of AI techniques for our industry is huge, and we should not be afraid of it. Within the next two to three decades, the majority of simple, manual tasks related to surveying, map making, GIS, and remote sensing will be done automatically or by robots, making our lives easier, but the majority of the conceptual work will still have to be done by humans. I believe that in our industry, the jobs lost to automation will be balanced by the new services these innovations enable.

Like the industrial and digital revolutions, the AI revolution is sure to bring significant changes to our lives. Machines will gradually improve, slowly taking over jobs that involve repetitive tasks. But what happens when, one day, machines become smarter than us? There is no good answer to that question…

According to Ito World