How we run OpenStreetMap service in Azure

Architecture, trade-offs and cost — what, why and how much.

As a .NET developer, I tend to fall into the trap of approaching every software problem by thinking about how I would solve it with C#/ASP.NET/MSSQL. As I try to break this habit, the StopByStop project has been my most valuable experience so far: it forced me to think outside the familiar toolbox.

When I first started prototyping a solution to show highway exits and the places of interest around them on a route from A to B for all US destinations (what eventually became StopByStop), I predictably started by searching StackOverflow for “C# maps routing” samples, which only took me so far. I was lucky to work with a really smart developer with OSM and GIS experience, who introduced me to PostgreSQL and PostGIS and wrote the initial version of the backend.

That was a great start, but I still had to improve scalability and host the service. After trying and rejecting one of the cheap shared PostGIS hosting providers, I decided that while the best long-term solution would be to manage our own servers, in the short term Azure, with its ability to resize instances, was the best way to go.

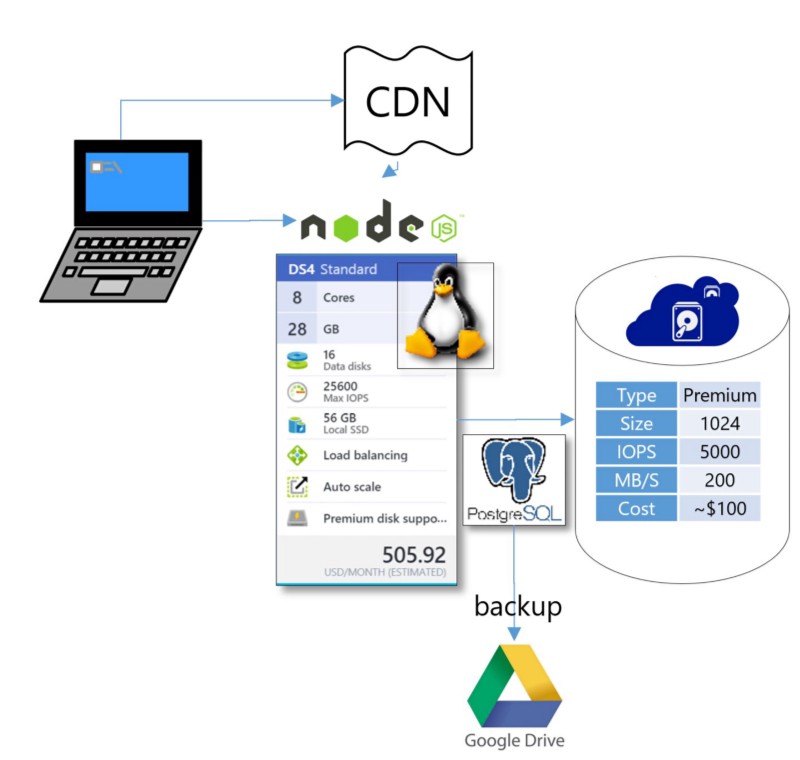

Linux VM with PostgreSQL

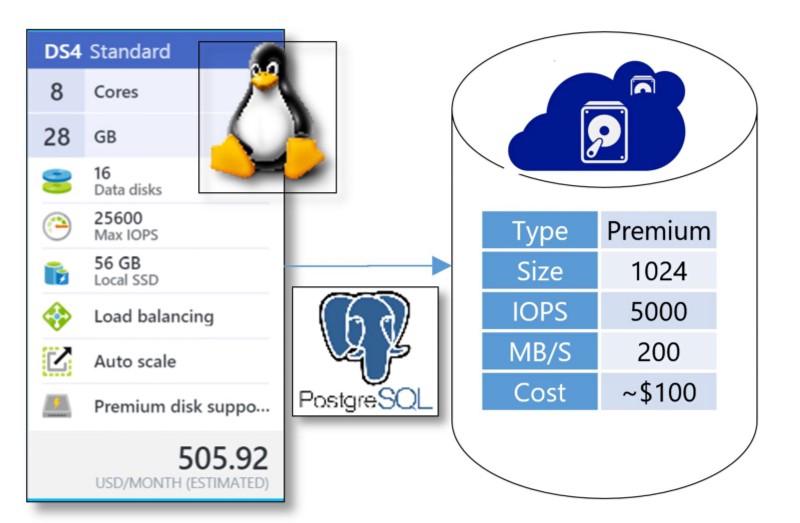

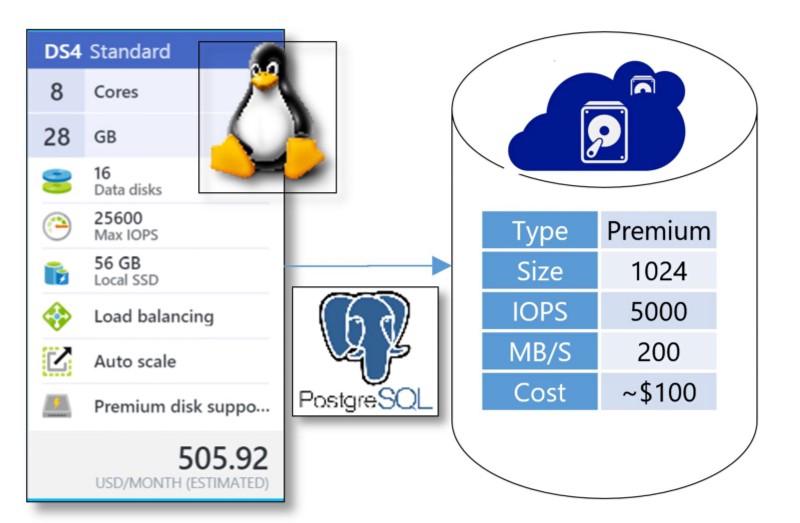

I experimented with various Linux VM sizes on Azure and eventually settled on DS4 for the VM and 1TB of premium storage for the database.

The CPU ended up being the bottleneck for query execution. Searching for exits and places of interest along a long route involves scanning indices with millions of entries on several tables, and PostgreSQL (at the time of writing) cannot use multiple cores to execute a single query. My approach was to break long routes into shorter chunks, which resulted in some loss of accuracy but allowed me to launch up to 8 sub-queries simultaneously for every customer query.
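The chunking idea can be sketched roughly as follows. This is a minimal illustration, not the actual StopByStop backend: `split_route`, `find_stops` and the `query_chunk` callback are hypothetical names, and the real sub-queries would be PostGIS queries, each sent over its own database connection so that PostgreSQL can serve them from separate backend processes.

```python
from concurrent.futures import ThreadPoolExecutor

MAX_PARALLEL = 8  # matches the "up to 8 sub-queries" figure in the text


def split_route(points, max_chunks=MAX_PARALLEL):
    """Split an ordered list of route points into at most max_chunks
    contiguous chunks; neighbouring chunks share their boundary point."""
    if len(points) < 2:
        return [list(points)]
    segments = len(points) - 1                 # number of point-to-point legs
    n_chunks = min(max_chunks, segments)
    base, extra = divmod(segments, n_chunks)
    chunks, start = [], 0
    for i in range(n_chunks):
        size = base + (1 if i < extra else 0)  # legs assigned to this chunk
        chunks.append(points[start:start + size + 1])
        start += size
    return chunks


def find_stops(points, query_chunk, max_chunks=MAX_PARALLEL):
    """Run query_chunk (hypothetical: one DB sub-query per chunk) on all
    chunks concurrently and merge the results in route order."""
    chunks = split_route(points, max_chunks)
    with ThreadPoolExecutor(max_workers=max_chunks) as pool:
        per_chunk = list(pool.map(query_chunk, chunks))  # map keeps input order
    return [stop for stops in per_chunk for stop in stops]
```

Threads are enough on the client side here because the heavy work happens inside the database; the client only waits for the sub-query results and stitches them together.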

The goal was to execute any uncached query in under 12 seconds on the DB side. That may not sound like an ambitious enough goal, but given that the intended scenario for our service is local destinations, we hope that in most cases customers will see their timeline appear much faster.

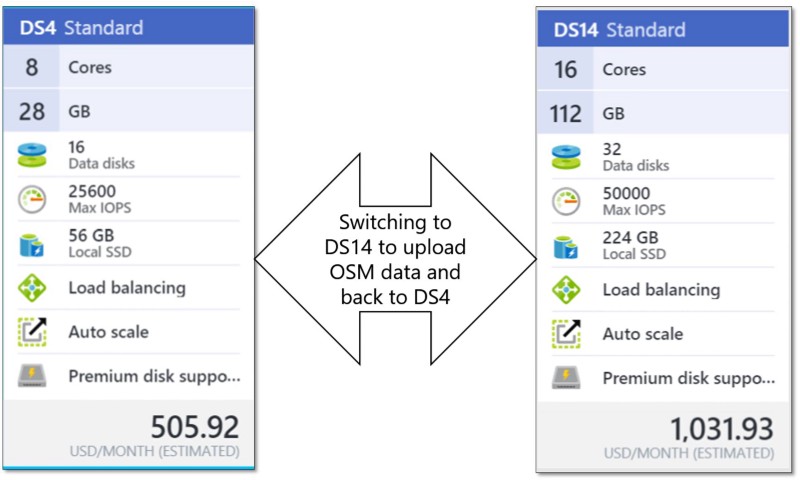

Azure VM resizing comes in handy for OSM data upload

So while DS4 is enough for regular operation, I found out that to upload OSM data into the database without spending several weeks on it, I needed a bigger machine: DS14.

This deserves a separate blog post, but in a nutshell, uploading OSM data to PostgreSQL involves

- osm2pgsql, which in my case doesn’t actually take that long, and there are a lot of helpful tips on optimizing its performance,

- osm2pgrouting, for which I couldn’t find many performance-related suggestions, and my question on GIS StackExchange remained unanswered.

osm2pgrouting loads the whole OSM file (~160GB for North America) into memory, so if most of it ends up in swap space, the import will take weeks, even if the swap space is on an SSD. On DS14, with its 112GB of RAM, I could complete osm2pgrouting in 3 days.

osm2pgrouting import into #postgresql for all North America complete after just 3 days! on #azure DS14 #osm pic.twitter.com/REw8MRd97d

— Alex Bulankou (@bulankou) March 2, 2016

Premium Storage

What about storage? Is there any way to save there? At first I considered getting something like D3, which has a 200GB local SSD; that would be sufficient for the North America OSM DB and I wouldn’t need extra storage. However, the problem with the local SSD is that its contents are irrevocably lost on any hardware failure or when the VM is resized. So this was not an option.

When choosing the premium storage size I picked P30 (1TB), because anything below that (like P20) would have had a transfer rate of less than 200MB/s. After trying a smaller size and watching it with the iostat tool, I found that the IO rate becomes the bottleneck at that point, and the price difference is not that significant.
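The reasoning above (pick the smallest disk that satisfies both the capacity and the throughput requirement) can be written down as a small helper. This is only a sketch: the tier table lists sizes and throughput limits as I recall Azure publishing them around that time, so treat the numbers as assumptions and check the current Azure documentation before relying on them.

```python
# Premium disk tiers as (name, size_gb, throughput_mb_s).
# ASSUMPTION: figures recalled from Azure's published limits circa 2016.
TIERS = [
    ("P10", 128, 100),
    ("P20", 512, 150),
    ("P30", 1024, 200),
]


def pick_tier(min_size_gb, min_throughput_mb_s, tiers=TIERS):
    """Return the name of the smallest tier meeting both requirements,
    or None if no tier qualifies."""
    for name, size_gb, throughput in sorted(tiers, key=lambda t: t[1]):
        if size_gb >= min_size_gb and throughput >= min_throughput_mb_s:
            return name
    return None


# A ~200GB database would fit on P20, but a 200MB/s requirement forces P30.
print(pick_tier(200, 150))
print(pick_tier(200, 200))
```

With this framing the database size alone would not have justified P30; it is the throughput requirement that decides the tier.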

Backup

I would like to be able to easily restore my database, with the OSM data along with my custom indexes and tables, on a different machine. This is in case I decide to scale out to process more queries simultaneously; ideally I wouldn’t want to wait 3 days for osm2pgrouting to complete again. This is what worked best for me: dumping the database with the pg_dump utility and backing the dump up to Google Drive using odeke-em/driveclient.

Neat! #googledrive from #linux shell: https://t.co/q6olYxFc3z by @odeke_et I used: https://t.co/VCOIT2iTcO Will use it for daily db backups.

— Alex Bulankou (@bulankou) March 4, 2016
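For the pg_dump half of that backup, a daily job could look roughly like the sketch below. The database name, output directory, host and user are placeholders, and the upload to Google Drive is deliberately left out because the exact client command is not given here.

```python
import subprocess
from datetime import date


def pg_dump_command(dbname, out_dir, day=None, host="localhost", user="postgres"):
    """Build a pg_dump command that writes a custom-format archive
    named after the database and the given day."""
    day = day or date.today()
    out_file = f"{out_dir}/{dbname}-{day.isoformat()}.dump"
    cmd = [
        "pg_dump",
        "--format=custom",       # archive format that pg_restore can read
        f"--host={host}",
        f"--username={user}",
        f"--file={out_file}",
        dbname,
    ]
    return cmd, out_file


def run_backup(dbname, out_dir):
    """Run pg_dump and return the dump path; raises CalledProcessError on failure."""
    cmd, out_file = pg_dump_command(dbname, out_dir)
    subprocess.run(cmd, check=True)
    return out_file
```

A custom-format dump can be loaded on another machine with pg_restore, which is what makes it possible to skip the multi-day osm2pgrouting import there.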

Frontend — using the right tool for the job?

Given that a Linux server was required for PostgreSQL, the cheapest approach for the frontend would have been to go with PHP or Node.js.

However, I have experience in neither of them, so to speed things up I went with the sub-optimal but known path: ASP.NET. It allowed me to get things done much faster, but the architecture ended up like this:

I’m using ASP.NET on an Azure Web App to host my web server and calling PostgreSQL through the Npgsql provider. Also, because I am addicted to LINQ to SQL (which doesn’t exist for PostgreSQL) and just plain lazy, I have a separate MSSQL database for non-GIS persistent data. Overall, my dependency on the Microsoft stack is costing us an extra $80 per month. That is OK for now, but going forward we will definitely be switching to hosting the web server on a Linux box, and ASP.NET Core on Linux may now be the best option for this migration.

For the Web App, I initially had our site running on B1, but memory turned out to be the main bottleneck, as I am keeping some of the datasets (like the list of all cities, the list of all exits, and routes for active sessions) in memory. The lesson I learnt is that when memory pressure comes primarily from such pre-cached datasets, scaling up, not scaling out, is the right approach.
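A back-of-the-envelope model shows why. Every instance has to hold the full pre-cached dataset, so adding instances only spreads the per-session part of the memory. The function and all numbers below are hypothetical and only illustrate the shape of the trade-off.

```python
def memory_per_instance_mb(cache_mb, session_mb, sessions, instances):
    """Estimate memory used per instance when each instance holds the full
    pre-cached dataset and sessions are spread evenly across instances."""
    return cache_mb + session_mb * sessions / instances


# Hypothetical workload: 1200MB of cached datasets, 100 sessions at 2MB each.
for n in (1, 2, 4):
    print(n, memory_per_instance_mb(1200, 2, 100, n))
```

In this made-up example, going from one instance to four saves only 150MB per instance, because the 1200MB cache is duplicated on each of them; a single larger instance pays for the cache once.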

CDN — a must for customer-facing sites

And finally, this one is a no-brainer, and the cost is insubstantial. A CDN, along with other optimizations, helped to significantly reduce page load time.

Reduced https://t.co/TKShM70np0 PLT from 11 seconds to 6 seconds on slow 3G, thanks to https://t.co/U4jn4K7OOq pic.twitter.com/ffKcmtOX7u

— Alex Bulankou (@bulankou) October 27, 2015

WebPageTest.org is a great tool for client performance measurement and analysis.

And what’s this StopByStop you keep mentioning?

If you have read this far, you might be interested in what exactly we are trying to solve with OpenStreetMap and why. Please read this and this.

This post was originally published on Medium and has been re-published on Geoawesomeness. You can follow Alex on Twitter @bulankou and on Medium.